Citation Information

- Author(s): Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, Illia Polosukhin

- Title: Attention Is All You Need

- Journal/Source: 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA.

- Publication Year: 2017 (latest revision: 2023)

- Pages: Not specified

- DOI/URL: arXiv:1706.03762v7

- Affiliation: Google Brain, Google Research, University of Toronto

Audience

- Target Audience: This paper is intended for researchers and practitioners in the fields of machine learning, artificial intelligence, and natural language processing. It is especially relevant for those working on neural networks, sequence modeling, and machine translation.

- Application: The intended audience can apply the knowledge from this paper to develop more efficient and effective models for tasks such as machine translation, text summarization, and other sequence-to-sequence tasks.

- Outcome: Readers who apply these ideas can expect competitive, potentially state-of-the-art results on sequence modeling tasks, with shorter training times because attention parallelizes across sequence positions.

Relevance

- Significance: This paper is highly significant as it introduces the Transformer model, which relies solely on attention mechanisms, eliminating the need for recurrent or convolutional networks in sequence transduction models. This represents a paradigm shift in neural network architectures for sequence processing.

- Real-world Implications: The research can be applied to improve the efficiency and accuracy of machine translation systems, text summarization tools, and other applications that require modeling of sequential data. The Transformer model’s parallelizable nature makes it particularly useful for large-scale implementations.

Conclusions

- Takeaways: The core conclusion is that the Transformer, built entirely on attention, outperforms recurrent and convolutional models on the two translation tasks studied. It reaches 28.4 BLEU on WMT 2014 English-to-German and 41.8 BLEU on English-to-French, at a fraction of the training cost of the best earlier models.

- Practical Implications: The paper suggests that the Transformer model can be practically applied to a wide range of sequence modeling tasks, offering a more efficient and scalable alternative to existing models.

- Potential Impact: If further research is pursued, the Transformer model could revolutionize the field of natural language processing by providing a more efficient and effective framework for various applications, potentially extending beyond text to other modalities such as audio and video.

Contextual Insight

- Abstract in a nutshell: The Transformer model is a new architecture for sequence transduction tasks that relies solely on attention mechanisms, eliminating the need for recurrent or convolutional layers. It achieves superior results in machine translation and generalizes well to other tasks.

- Abstract Keywords: sequence transduction, attention mechanisms, Transformer model, machine translation, parallelization

- Gap/Need: The paper addresses the need for more efficient and scalable models for sequence transduction tasks, overcoming the limitations of recurrent and convolutional networks.

- Innovation: The innovative aspect of the paper is the introduction of the Transformer model, which uses self-attention mechanisms to compute representations of input and output sequences without relying on recurrence or convolution.

Key Quotes

- “The dominant sequence transduction models are based on complex recurrent or convolutional neural networks that include an encoder and a decoder.”

- “We propose a new simple network architecture, the Transformer, based solely on attention mechanisms, dispensing with recurrence and convolutions entirely.”

- “Experiments on two machine translation tasks show these models to be superior in quality while being more parallelizable and requiring significantly less time to train.”

- “Our model achieves 28.4 BLEU on the WMT 2014 English-to-German translation task, improving over the existing best results, including ensembles, by over 2 BLEU.”

- “We show that the Transformer generalizes well to other tasks by applying it successfully to English constituency parsing both with large and limited training data.”

Questions and Answers

- What is the primary innovation introduced by the Transformer model?

- The Transformer model introduces a sequence transduction architecture based entirely on self-attention mechanisms, eliminating the need for recurrent or convolutional networks.

- How does the Transformer model perform compared to previous state-of-the-art models in machine translation?

- The Transformer achieves 28.4 BLEU on the WMT 2014 English-to-German task, more than 2 BLEU above the previous best results (including ensembles), and 41.8 BLEU on the English-to-French task, a new single-model state of the art at the time.

- What are the benefits of using self-attention mechanisms in the Transformer model?

- Self-attention mechanisms allow for greater parallelization, reduced training times, and the ability to model dependencies regardless of their distance in the input or output sequences.

- How does the Transformer model handle positional information without recurrence or convolution?

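- The model adds positional encodings to the input embeddings at the bottom of the encoder and decoder stacks, injecting information about the relative or absolute position of tokens in the sequence. The paper uses fixed sine and cosine functions of different frequencies and reports that learned positional embeddings gave nearly identical results.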

- Can the Transformer model generalize to tasks other than machine translation?

- Yes, the Transformer model generalizes well to other tasks, such as English constituency parsing, demonstrating its versatility and effectiveness beyond machine translation.
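The positional-encoding answer above can be made concrete with a small sketch. It follows the sinusoidal formula from the paper, PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). The function names `positional_encoding` and `add_positions` are illustrative choices, not taken from the authors' code, and plain Python lists stand in for tensors.

```python
import math


def positional_encoding(num_positions, d_model):
    """Return a num_positions x d_model table of sinusoidal encodings."""
    table = []
    for pos in range(num_positions):
        row = []
        for j in range(d_model):
            # Dimensions 2i and 2i+1 share the frequency 1 / 10000^(2i / d_model).
            i = j // 2
            angle = pos / (10000 ** (2 * i / d_model))
            # Even dimensions use sine, odd dimensions use cosine.
            row.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def add_positions(embeddings):
    """Add the positional encoding element-wise to a list of embedding vectors."""
    d_model = len(embeddings[0])
    encodings = positional_encoding(len(embeddings), d_model)
    return [
        [e + p for e, p in zip(embedding, encoding)]
        for embedding, encoding in zip(embeddings, encodings)
    ]
```

Because the encoding is a fixed function of the position, it needs no training and is defined for any sequence length.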

Paper Details

Mind Map of the Paper

Purpose/Objective

- Goal: The primary aim of the paper is to introduce the Transformer model, a new architecture for sequence transduction tasks that relies entirely on self-attention mechanisms, and to demonstrate its superiority over traditional recurrent and convolutional models.

- Research Questions/Hypotheses: The central research questions include whether self-attention mechanisms can replace recurrence and convolution in sequence transduction models and whether such a model can achieve state-of-the-art results in machine translation and other tasks.

- Significance: The authors felt this research was necessary to address the limitations of recurrent and convolutional networks, particularly their sequential nature, which hinders parallelization and increases training times.

Background Knowledge

- Core Concepts:

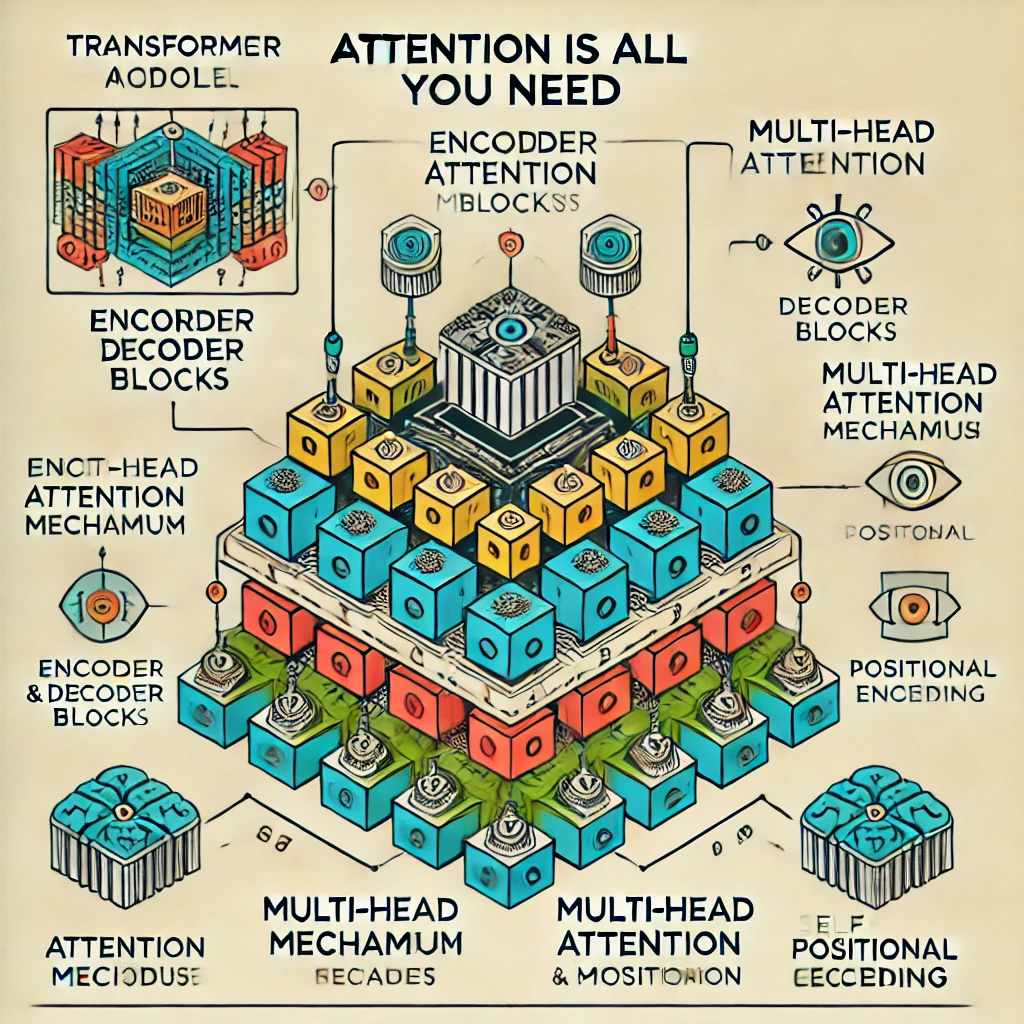

- Sequence Transduction: Transforming input sequences into output sequences, such as in machine translation.

- Self-Attention: Mechanism that allows modeling of dependencies between different positions in a sequence, regardless of their distance.

- Transformer Model: A neural network architecture that uses self-attention mechanisms without recurrence or convolution.

- Preliminary Theories: The paper builds upon existing work on attention mechanisms in neural networks, particularly in the context of machine translation and sequence modeling.

- Contextual Timeline:

- 2014: Introduction of neural machine translation models using RNNs (Sutskever et al.).

- 2014-2015: Development of attention mechanisms to improve sequence transduction models (Bahdanau et al., Luong et al.).

- 2017: Introduction of the Transformer model (Vaswani et al.).

- Prior Research: Significant previous studies include the development of neural machine translation models using recurrent and convolutional networks, as well as the incorporation of attention mechanisms to enhance these models.

- Terminology:

- BLEU Score: A metric for evaluating the quality of text that has been machine-translated from one language to another.

- Positional Encoding: A technique used to inject information about the position of tokens in a sequence into the input embeddings.

- Essential Context: The paper was likely influenced by the increasing demand for more efficient and scalable models for sequence transduction tasks, as well as the limitations of existing recurrent and convolutional networks.
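To illustrate the self-attention concept listed under Core Concepts, here is a minimal sketch of the paper's scaled dot-product attention, Attention(Q, K, V) = softmax(QK^T / sqrt(d_k)) V. It is a simplification under stated assumptions: the learned query, key, and value projections and the multi-head structure are omitted, and the function names are illustrative rather than the authors'.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Compute softmax(Q K^T / sqrt(d_k)) V for lists of row vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs


def self_attention(x):
    """Self-attention without learned projections (an assumption of this
    sketch): queries, keys, and values are all the input sequence itself."""
    return scaled_dot_product_attention(x, x, x)
```

Every position attends to every other position in a single step, which is why the distance between two tokens does not affect how directly they can interact.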

Methodology

- Research Design & Rationale:

- Type: Experimental, with a focus on developing and evaluating a new neural network architecture.

- Implications: The methodology allows for the evaluation of the Transformer model’s performance compared to traditional models in sequence transduction tasks.

- Reasoning: The authors chose this design to empirically demonstrate the advantages of self-attention mechanisms over recurrence and convolution.

- Participants/Subjects: Not applicable, as the study is based on machine learning models and datasets.

- Instruments/Tools: The study uses the Transformer model implemented in TensorFlow, with experiments conducted on the WMT 2014 English-German and English-French translation datasets.

- Data Collection:

- Process: The authors trained and evaluated the Transformer model on standard machine translation benchmarks.

- Locations: The models were trained on one machine with 8 NVIDIA P100 GPUs.

- Duration: The base model was trained for 100,000 steps (about 12 hours) and the big model for 300,000 steps (3.5 days).

- Controls: The experiments included comparisons with previous state-of-the-art models and ablation studies to evaluate the importance of different components of the Transformer.

- Data Analysis Techniques:

- Techniques: The authors used BLEU scores to evaluate translation quality and compared training times and computational costs.

- Software: TensorFlow was used for model implementation and training.

- Rationale: The chosen techniques and software are standard in the field of machine translation and allow for a fair comparison with previous work.

- Ethical Considerations: Not specifically mentioned, but standard research ethics in machine learning apply.

- Comparison to Standard: The methodology adheres to standard practices in evaluating machine translation models, with the added innovation of using self-attention mechanisms.

- Replicability Score: 9/10. The study provides detailed descriptions of the model architecture, training procedures, and evaluation metrics, making it relatively easy for other researchers to replicate the experiments.
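For reference, the BLEU metric used in the evaluation is conventionally defined as a brevity-penalized geometric mean of modified n-gram precisions. The block below gives that standard definition; the summary does not say which BLEU implementation or tokenization was used, so the usual choice N = 4 with uniform weights w_n = 1/N is an assumption here.

```latex
\mathrm{BLEU} = \mathrm{BP} \cdot \exp\!\left( \sum_{n=1}^{N} w_n \log p_n \right),
\qquad
\mathrm{BP} =
\begin{cases}
1 & \text{if } c > r, \\
e^{\,1 - r/c} & \text{if } c \le r,
\end{cases}
```

Here p_n is the modified n-gram precision of the candidate translation, c is the candidate length, and r is the effective reference length.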

Main Results/Findings

- Metrics:

- BLEU Score: A key metric for evaluating translation quality.

- Training Time: The duration required to train the models.

- Computational Cost: The number of floating-point operations used during training.

- Graphs/Tables:

- Figure 1: Model architecture of the Transformer.

- Table 1: Comparison of complexity, path length, and operations for different layer types.

- Table 2: BLEU scores and training costs for different models on the English-to-German and English-to-French translation tasks.

- Table 3: Variations on the Transformer architecture and their impact on performance.

- Outcomes: The Transformer achieves state-of-the-art BLEU scores on the WMT 2014 translation tasks: 28.4 on English-to-German, over 2 BLEU better than the previous best results including ensembles, and 41.8 on English-to-French, a new single-model best.

- Data & Code Availability: The code used to train and evaluate the models is available on GitHub at https://github.com/tensorflow/tensor2tensor.

- Statistical Significance: The paper reports no statistical significance tests; the case for the Transformer rests on the size of the BLEU gains over previously published results.

- Unintended Findings: The study also found that the Transformer model generalizes well to other tasks, such as English constituency parsing, without task-specific tuning.
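The per-layer complexity comparison summarized in Table 1 can be explored numerically. The sketch below uses the leading-order terms from that table (self-attention O(n²·d), recurrent O(n·d²), convolutional O(k·n·d²), restricted self-attention O(r·n·d)) with constant factors dropped, so the numbers are only meaningful relative to each other. The function names and the default kernel width are illustrative assumptions.

```python
def per_layer_cost(n, d, k=3, r=None):
    """Leading-order per-layer operation counts for sequence length n and
    representation dimension d. k is the convolution kernel width and r the
    neighborhood size for restricted self-attention. Constants are dropped."""
    costs = {
        "self_attention": n * n * d,
        "recurrent": n * d * d,
        "convolutional": k * n * d * d,
    }
    if r is not None:
        costs["restricted_self_attention"] = r * n * d
    return costs


def sequential_operations(n):
    """Minimum number of sequential operations per layer: only the recurrent
    layer must process the n positions one after another."""
    return {"self_attention": 1, "recurrent": n, "convolutional": 1}


if __name__ == "__main__":
    # A sentence-length input versus a much longer sequence, with d = 512.
    for n in (50, 1000):
        print(n, per_layer_cost(n, d=512, r=64), sequential_operations(n))
```

With d = 512, self-attention is cheaper than a recurrent layer at n = 50 but more expensive at n = 1000, matching the paper's observation that self-attention layers are faster when the sequence length is smaller than the representation dimensionality.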

Authors’ Perspective

- Authors’ Views: The authors believe that the Transformer model’s reliance on self-attention mechanisms represents a significant advancement in sequence transduction models, offering superior performance and efficiency.

- Comparative Analysis: The authors’ interpretations are consistent with previous work on attention mechanisms, but they extend this work by demonstrating that self-attention alone can achieve state-of-the-art results.

- Contradictions: There are no apparent contradictions in the authors’ discussion of the results.

Limitations

- List:

- The study does not explore the full potential of the Transformer model for other tasks beyond machine translation and English constituency parsing.

- The impact of different hyperparameter settings is not exhaustively explored.

- Mitigations: The paper does not discuss these limitations in a dedicated section, but its proposed future work on other modalities and restricted attention addresses them in part.

Proposed Future Work

- Authors’ Proposals:

- Extend the Transformer model to problems involving input and output modalities other than text, such as images, audio, and video.

- Investigate local, restricted attention mechanisms to efficiently handle large inputs and outputs.

- Explore making generation less sequential to further improve efficiency.
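The proposal for local, restricted attention can be illustrated with a masking sketch. The paper only describes the idea of letting each position attend to a neighborhood of size r around it; the functions below are one hypothetical way to express that, not the authors' method, and their names are made up for this example.

```python
import math


def local_attention_mask(n, r):
    """mask[i][j] is True when position i may attend to position j, using a
    window of roughly r positions centered on i (truncated at the edges)."""
    half = r // 2
    return [[abs(i - j) <= half for j in range(n)] for i in range(n)]


def masked_softmax(scores, allowed):
    """Softmax over the allowed positions only; masked positions get weight 0.
    Assumes at least one position is allowed."""
    kept = [s for s, ok in zip(scores, allowed) if ok]
    m = max(kept)
    exps = [math.exp(s - m) if ok else 0.0 for s, ok in zip(scores, allowed)]
    total = sum(exps)
    return [e / total for e in exps]
```

Restricting each position to r neighbors reduces the per-layer cost from O(n²·d) to O(r·n·d), at the price of a longer path between distant positions.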

References

- Notable Citations:

- Bahdanau, D., Cho, K., & Bengio, Y. (2014). Neural machine translation by jointly learning to align and translate. CoRR, abs/1409.0473.

- Sutskever, I., Vinyals, O., & Le, Q. V. (2014). Sequence to sequence learning with neural networks. In Advances in Neural Information Processing Systems (pp. 3104-3112).