These are my reading notes for Chapter 14 of the book “System Design Interview – An insider’s guide (Vol. 1)”.

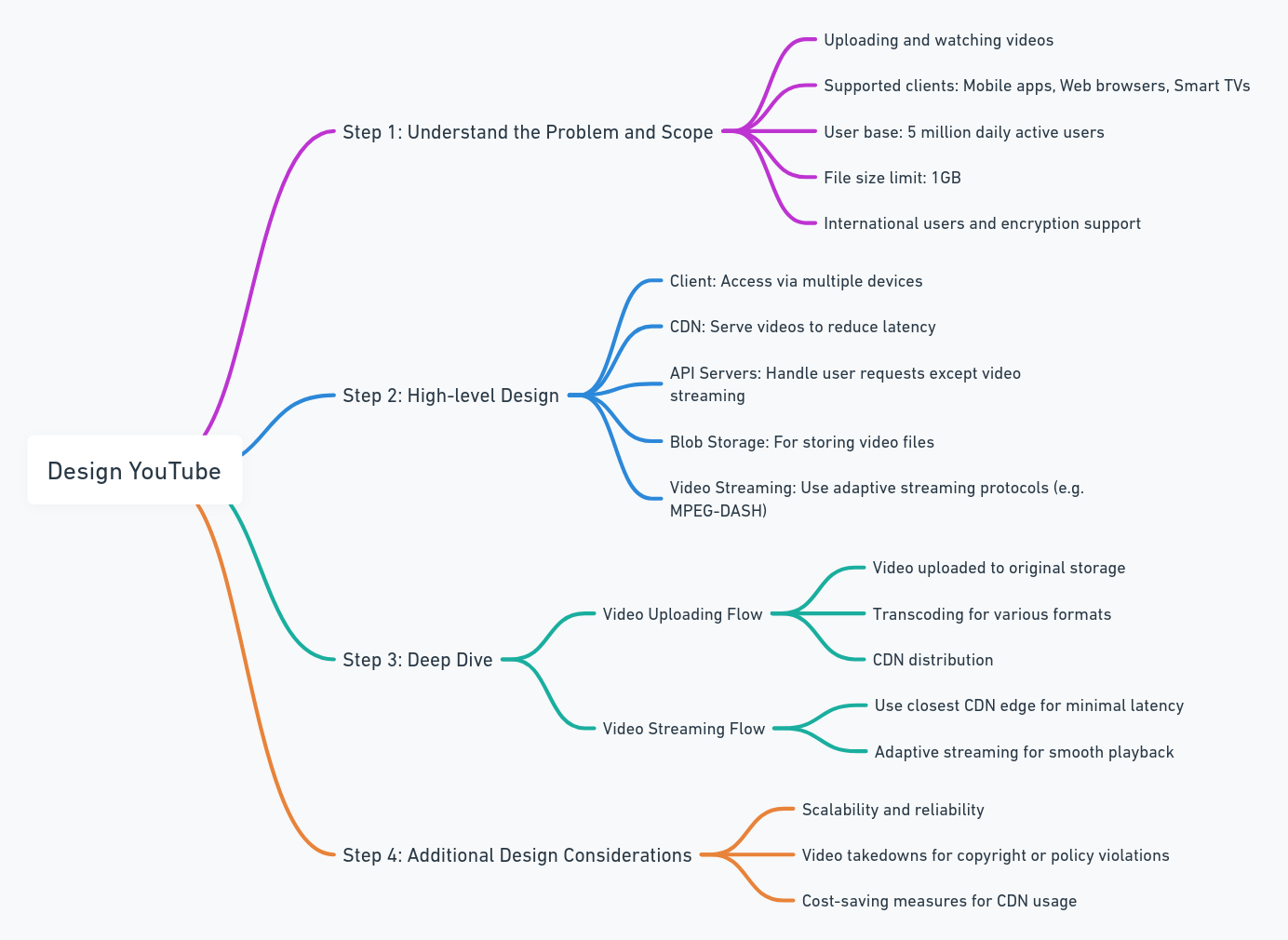

Designing YouTube presents a unique challenge because of the platform’s massive scale and complexity. The system must serve millions of users who upload, view, and stream videos worldwide. The chapter explains how to approach designing such a system by breaking it down into several components, focusing on scalability, video storage, transcoding, streaming, and cost efficiency.

Key Components

The YouTube system can be broken down into three core components:

- Client-Side: Devices where users upload or watch videos (computers, smartphones, tablets, smart TVs, etc.).

- CDN (Content Delivery Network): Efficient distribution of video files by caching them in geographically distributed servers.

- Backend API Servers: Handle video uploads, user interactions (like, comment, share), recommendations, metadata updates, and other non-streaming functions.

Step-by-Step Breakdown

1. Video Upload Flow

When a user uploads a video to YouTube, several processes take place to ensure the video is available for playback across various devices and network conditions.

Detailed Steps:

- Uploading to Original Storage:

- Users upload a video file to the original storage server. Given the large size of videos (sometimes exceeding 1GB), the upload process is optimized to handle large file transfers smoothly.

- Example: A user uploads a 500MB video. The system receives the raw video and temporarily stores it in blob storage (e.g., Amazon S3 or Google Cloud Storage).

- API Servers Update the Metadata Database:

- While the video file is being uploaded, API servers update the video metadata (title, description, tags, etc.) in the database in parallel, so the metadata is already in place by the time the video finishes processing.

- Example: The title “My Summer Vacation” and tags such as “travel” and “beach” are saved in the database alongside the video’s unique ID.

- Transcoding the Video:

- Once the video upload is complete, the video file is sent to transcoding servers. These servers encode the video into multiple resolutions (e.g., 144p, 360p, 720p, 1080p) in a container format such as MP4, and package the output for streaming protocols such as HLS and MPEG-DASH, so it can be streamed across various devices.

- Example: A 1080p video is transcoded into 144p for users with low-bandwidth networks and 1080p for high-definition devices. The transcoded videos are stored in blob storage for streaming.

- CDN Distribution:

- The transcoded videos are copied to CDN servers, which are geographically distributed to ensure low-latency video playback. CDNs cache video files and serve them from the closest server to the user, minimizing delay.

- Example: A user in New York streams the video from a CDN server located on the East Coast, while a user in Europe streams from a CDN server in Amsterdam.

- Video Processing Completion:

- Once all the video formats are processed and stored in the CDN, the video is marked as available. Users can start watching the video across different devices and resolutions.
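The upload steps above can be read as a small state machine. The sketch below is only an illustration under stated assumptions: the `Video` class, the status names, the helper functions, and the file-naming scheme are all hypothetical and do not come from the chapter, and the transcoding and CDN steps are simulated rather than performed.

```python
from dataclasses import dataclass, field
from enum import Enum


class VideoStatus(Enum):
    UPLOADING = "uploading"
    TRANSCODING = "transcoding"
    DISTRIBUTING = "distributing"
    AVAILABLE = "available"


@dataclass
class Video:
    video_id: str
    title: str
    tags: list[str] = field(default_factory=list)
    status: VideoStatus = VideoStatus.UPLOADING
    renditions: list[str] = field(default_factory=list)


# Hypothetical resolution ladder, taken from the examples in these notes.
TARGET_RESOLUTIONS = ["144p", "360p", "720p", "1080p"]


def complete_upload(video: Video) -> None:
    """The original file has landed in blob storage; hand off to transcoding."""
    video.status = VideoStatus.TRANSCODING


def transcode(video: Video) -> None:
    """Simulate producing one rendition per target resolution."""
    video.renditions = [f"{video.video_id}_{res}.mp4" for res in TARGET_RESOLUTIONS]
    video.status = VideoStatus.DISTRIBUTING


def distribute_to_cdn(video: Video) -> None:
    """Simulate copying renditions to the CDN, then mark the video playable."""
    video.status = VideoStatus.AVAILABLE


video = Video("abc123", "My Summer Vacation", ["travel", "beach"])
for step in (complete_upload, transcode, distribute_to_cdn):
    step(video)
```

In a real system each step would be an asynchronous job triggered by a queue or a completion event rather than a direct function call.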

2. Video Streaming Flow

Streaming video in real-time is one of the biggest challenges in designing YouTube. The platform uses adaptive streaming to deliver different video qualities based on the user’s internet connection.

Detailed Steps:

- Client Requests a Video:

- When a user clicks on a video, their device sends a request to the backend API. The server returns a video playback URL from the CDN.

- MPEG-DASH and HLS Streaming:

- YouTube uses modern streaming protocols like MPEG-DASH and HLS (HTTP Live Streaming). These protocols break the video into small chunks (segments). The player fetches the segments one at a time and chooses the quality of each one based on the network speed it measures.

- Example: If a user has a slow internet connection, YouTube will start with 144p chunks, but as bandwidth improves, it will automatically switch to higher quality, such as 360p or 720p.

- Content Delivery Network (CDN):

- The CDN server closest to the user streams the video. By caching video content across multiple locations, the CDN reduces latency and improves the overall streaming experience.

- Example: If a user watches a popular video like “Top 10 Best Moments in Football,” CDN servers will already have this video cached due to its popularity.

- Handling Buffering and Resilience:

- YouTube employs multiple techniques to reduce buffering. This includes prefetching the next few video segments to ensure continuous playback, even if network conditions temporarily degrade.

- Example: If the user’s connection weakens, the system can downgrade the video quality to 144p or 240p instead of pausing the video.
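The quality switching described above comes down to picking the best rendition that fits the measured bandwidth. Here is a minimal sketch of that selection logic. The bitrate numbers in `LADDER`, the `safety_factor` parameter, and the function name are illustrative assumptions, not YouTube's actual values or algorithm; real players also take buffer level and recent throughput history into account.

```python
# Hypothetical bitrate ladder: (label, required bandwidth in kbit/s).
# The numbers are illustrative assumptions, not real YouTube values.
LADDER = [
    ("144p", 100),
    ("240p", 300),
    ("360p", 700),
    ("720p", 2500),
    ("1080p", 5000),
]


def pick_rendition(measured_kbps: float, safety_factor: float = 0.8) -> str:
    """Return the highest rendition whose bitrate fits within a safety margin."""
    budget = measured_kbps * safety_factor
    choice = LADDER[0][0]  # always fall back to the lowest quality
    for label, required_kbps in LADDER:
        if required_kbps <= budget:
            choice = label
    return choice
```

The player would call `pick_rendition` before each segment request, so a drop in bandwidth lowers the quality of the next segment instead of stalling playback.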

Key Design Considerations

1. Video Transcoding

- One of the most resource-intensive processes is transcoding videos into multiple formats and resolutions. YouTube must balance quality with bandwidth efficiency.

- Example: Videos are transcoded to support devices with varying capabilities, from older mobile phones with limited processing power to 4K-capable TVs.
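One simple way to limit transcoding work is to decide up front which renditions are worth producing for a given source. The sketch below assumes a policy of never upscaling, which is my assumption and not something the chapter states; `TARGET_HEIGHTS` and `renditions_for` are hypothetical names.

```python
# Hypothetical set of target heights in pixels (2160 corresponds to 4K).
TARGET_HEIGHTS = [144, 240, 360, 480, 720, 1080, 2160]


def renditions_for(source_height: int) -> list[int]:
    """List target heights at or below the source height (no upscaling)."""
    targets = [h for h in TARGET_HEIGHTS if h <= source_height]
    # A source smaller than every target is kept at its own resolution.
    return targets or [source_height]
```

With this policy a 720p upload never triggers 1080p or 4K encoding jobs, which saves both compute and storage.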

2. Metadata Management

- Managing metadata (such as video titles, descriptions, tags, user information) is essential for efficient video retrieval and recommendations.

- Example: The metadata for a viral video includes trending tags and search terms that allow the recommendation system to highlight the video to relevant users.

3. Handling High Traffic

- With millions of users watching videos simultaneously, load balancing is critical to distributing requests across multiple servers.

- Example: When a popular event, like a live stream of a sports game, takes place, load balancers ensure that no single server is overwhelmed by distributing traffic efficiently.
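To make the load-balancing idea concrete, here is a minimal sketch of one common strategy, least connections, where each new request goes to the server with the fewest requests in flight. The chapter does not prescribe a specific algorithm; the class name and server names are hypothetical.

```python
class LeastConnectionsBalancer:
    """Route each request to the server with the fewest active requests."""

    def __init__(self, servers: list[str]) -> None:
        self.active = {server: 0 for server in servers}

    def acquire(self) -> str:
        """Pick the least-loaded server and count the new request against it."""
        server = min(self.active, key=lambda s: self.active[s])
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        """Call when a request finishes so the server's load count drops."""
        self.active[server] -= 1


balancer = LeastConnectionsBalancer(["api-1", "api-2", "api-3"])
first = balancer.acquire()   # all servers idle, so the first listed wins the tie
balancer.release(first)
```

Round-robin or weighted variants follow the same shape; only the selection rule in `acquire` changes.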

4. Cost Efficiency through CDNs

- The chapter emphasizes minimizing CDN costs, which can become substantial at YouTube’s scale. Some strategies to achieve this include:

- Serving only the most popular videos from the CDN, and the rest from lower-cost video storage servers.

- On-demand encoding for less popular videos (reducing unnecessary processing costs).

- Edge caching to reduce the need for central servers to serve content to distant users.

- Example: YouTube stores viral videos like “Despacito” on CDNs near major user hubs (e.g., New York, London), ensuring quick access for users and reducing the strain on the main servers.
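The "popular videos only" strategy can be sketched as a routing decision made when the playback URL is generated. Everything specific here is an assumption for illustration: the host names, the view-count threshold, and the use of raw view count as the popularity signal.

```python
# Hypothetical host names; a real deployment would have its own.
CDN_HOST = "cdn.example.com"
STORAGE_HOST = "videos.example.com"


def playback_host(view_count: int, popularity_threshold: int = 10_000) -> str:
    """Serve popular videos from the CDN and the long tail from origin storage."""
    return CDN_HOST if view_count >= popularity_threshold else STORAGE_HOST


def playback_url(video_id: str, view_count: int) -> str:
    """Build the URL the API server would hand back to the client."""
    return f"https://{playback_host(view_count)}/{video_id}/manifest"
```

The trade-off is that long-tail videos may start more slowly, in exchange for not paying CDN rates on content that is rarely watched.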

5. Error Handling and Reliability

- The system must handle various types of errors, including transcoding errors, file upload issues, and network timeouts.

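- Example: If transcoding fails because of a transient problem (such as a worker crashing mid-job), the system retries the job. If the failure is permanent (such as an unsupported or malformed video format), retrying will not help, so the system stops processing and returns an error to the user.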
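A retry mechanism for this kind of error handling might look like the sketch below. It assumes errors can be classified into ones worth retrying and ones that are not; the exception classes, the function name, and the exponential backoff policy are my own illustrative choices.

```python
import time


class TransientError(Exception):
    """A failure that may succeed on retry (e.g. a timeout)."""


class PermanentError(Exception):
    """A failure that retrying cannot fix."""


def run_with_retries(task, max_attempts: int = 3, base_delay: float = 0.5):
    """Run task(), retrying transient failures with exponential backoff.

    PermanentError is not caught, so it propagates on the first attempt.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the error to the caller
            time.sleep(base_delay * 2 ** (attempt - 1))
```

The caller is then responsible for turning a final failure into user-facing feedback.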

Challenges and Trade-offs

- Balancing Video Quality and Bandwidth

- Delivering high-quality videos (e.g., 1080p, 4K) requires significant bandwidth, but too much demand could overwhelm the system. Therefore, the system needs to balance quality and bandwidth use effectively.

- Example: YouTube employs adaptive bitrate streaming to dynamically adjust the video quality based on the user’s internet connection, ensuring optimal viewing without causing bandwidth overload.

- High Availability

- YouTube’s architecture must be designed with high redundancy to ensure that services remain available, even in the event of hardware failure or regional network outages.

- Example: Multiple redundant copies of popular videos are stored at CDN locations around the globe. If one server fails, another can serve the video without interruption.

- Scalability

- YouTube must handle millions of simultaneous users, which requires horizontal scalability at every tier—API servers, databases, CDNs, and storage.

- Example: As the number of active users increases during a major live stream, additional API servers can be spun up to manage the increased load.
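The scaling decision in the example above is, at its simplest, a capacity calculation. This sketch assumes a fixed per-server capacity and a headroom percentage, both hypothetical parameters that a real autoscaler would derive from measurements.

```python
import math


def servers_needed(
    requests_per_second: float,
    capacity_per_server: float,
    headroom: float = 0.3,
    min_servers: int = 2,
) -> int:
    """Estimate the API server count for a given load, with spare headroom."""
    required = requests_per_second * (1 + headroom) / capacity_per_server
    # Keep a minimum fleet so a single failure does not take the tier down.
    return max(min_servers, math.ceil(required))
```

For instance, at 10,000 requests per second, 400 requests per second per server, and 30% headroom, the raw requirement is 32.5 servers, which rounds up to 33.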

Key Takeaways

- Adaptive Streaming Is Key: To support users with varying bandwidth, YouTube uses adaptive bitrate streaming so that each user gets the highest quality their connection can sustain.

- Scalability Is Paramount: YouTube’s architecture is designed to scale horizontally across all components. CDN caching, load balancing, and efficient metadata management ensure that YouTube can handle billions of requests per day.

- Cost Efficiency Through CDN Optimization: CDNs are essential to reducing latency, but they can also be expensive. Serving only popular videos through CDNs and using strategies like edge caching can significantly reduce costs.

- Error Handling: The system must handle errors gracefully, with retry mechanisms for video uploads and transcoding and appropriate user feedback in case of failures.

These detailed notes provide a comprehensive understanding of YouTube’s system design, focusing on both the technical architecture and the trade-offs involved in building a video-sharing platform at such a massive scale.